Anonymity, De-Identification, and the Accuracy of Data

By Professors Michael D. Smith and Jim Waldo

Published August 28, 2023

Courses Mentioned in this Post: Data Privacy and Technology

Series Mentioned in this Post: Harvard On Digital

One privacy-preserving mechanism used in data analytics is to anonymize, or de-identify, the data. The intuition is that you can protect the privacy of the individuals whose data is being used if you remove the information that allows those individuals to be identified. In practice, however, there is no single, widely used method for anonymizing data. Different regulations impose different requirements for a data set to count as de-identified, so knowing which regulation governs your work, and what it requires for de-identification, is essential if you are to comply with the applicable laws.

These subjects are discussed in greater detail in our course, but here is a quick primer on different kinds of anonymization and de-identification, organized around three different regulations.

Directory Information, Health Data, and HIPAA

An early attempt to protect the privacy of research subjects can be found in the Health Insurance Portability and Accountability Act (HIPAA). Under that regulation, a data set is considered anonymized if the directory information about each subject has been removed. Directory information includes data such as name, Social Security number, and address. HIPAA’s Safe Harbor method lists 18 categories of such identifiers; if you remove those from your data set, it can be shared without violating the privacy regulation.

While removing directory information will keep you within the rules of the regulation, it will not protect the privacy of the individuals in the data set. This was shown in 1997, when Latanya Sweeney re-identified medical records that had been stripped of this kind of directory information, including the record of the then-governor of Massachusetts.

Sweeney’s work led to the notion of a quasi-identifier: information about you (e.g., gender or birthdate) that cannot identify you on its own, but that can be combined with other quasi-identifiers and the combination matched against another data set. The problem arises when that second data set (e.g., a voter registration list) also contains some of your directory information, such as your name. By linking quasi-identifiers across data sets, an adversary can re-identify a record in a “de-identified” data set and discover whatever personal information (e.g., medical treatments) was meant to be kept anonymous.
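The linking attack amounts to a join on quasi-identifiers. The sketch below is a toy illustration, not Sweeney’s actual method: the records, the field names (`gender`, `birthdate`, `zip`, `diagnosis`), and the helpers `qi_key` and `link` are all hypothetical, and every value is invented.

```python
from typing import Dict, List, Tuple

# Hypothetical toy data; every name and value is invented for illustration.
# "De-identified" medical records: names removed, quasi-identifiers kept.
medical = [
    {"gender": "F", "birthdate": "1961-07-31", "zip": "02138", "diagnosis": "hypertension"},
    {"gender": "M", "birthdate": "1950-04-12", "zip": "02138", "diagnosis": "asthma"},
    {"gender": "M", "birthdate": "1972-03-02", "zip": "02139", "diagnosis": "diabetes"},
]

# A public list (e.g., voter registration) pairing the same fields with names.
voters = [
    {"name": "Alice Example", "gender": "F", "birthdate": "1961-07-31", "zip": "02138"},
    {"name": "Bob Example", "gender": "M", "birthdate": "1950-04-12", "zip": "02138"},
    {"name": "Carol Example", "gender": "F", "birthdate": "1980-01-15", "zip": "02140"},
]

QUASI_IDENTIFIERS = ("gender", "birthdate", "zip")


def qi_key(record: dict, fields: Tuple[str, ...]) -> Tuple[str, ...]:
    """The tuple of quasi-identifier values used as a join key."""
    return tuple(record[f] for f in fields)


def link(deidentified: List[dict], public: List[dict],
         fields: Tuple[str, ...] = QUASI_IDENTIFIERS) -> List[Tuple[str, str]]:
    """Return (name, diagnosis) for each record whose key matches exactly one public record."""
    index: Dict[Tuple[str, ...], List[dict]] = {}
    for person in public:
        index.setdefault(qi_key(person, fields), []).append(person)
    matches = []
    for record in deidentified:
        candidates = index.get(qi_key(record, fields), [])
        if len(candidates) == 1:  # a unique match ties the record to one named person
            matches.append((candidates[0]["name"], record["diagnosis"]))
    return matches


print(link(medical, voters))
# → [('Alice Example', 'hypertension'), ('Bob Example', 'asthma')]
```

Neither table alone reveals who has which diagnosis; the harm comes entirely from the combination.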

K-Anonymity, Educational Data, and FERPA

This notion of a quasi-identifier was used in the Family Educational Rights and Privacy Act (FERPA), a different regulation governing the sharing of educational data within the United States. FERPA specifies that data is de-identified if, for the combination of quasi-identifiers that any individual in the data set has, there are at least four other individuals in the data set with the same combination. So if a data set contains your gender, birthdate, and zip code (and no other quasi-identifiers), then there need to be at least four other records in the data set with those same characteristics.

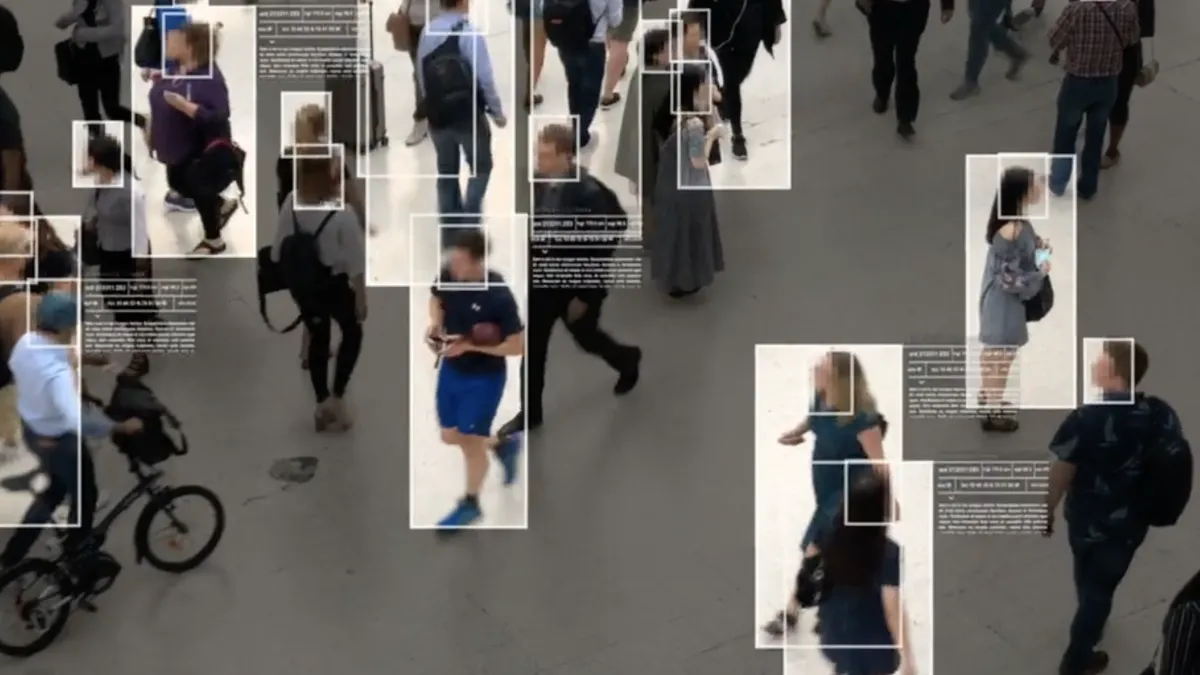

This idea of hiding in a crowd is the basis for k-anonymity (in this case, with k = 5). The guarantee is that if a data set is k-anonymous, an adversary intent on re-identification might be able to narrow your information down to one of at least k possible records, but won’t be able to determine which of them is yours.
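Checking k-anonymity is a matter of grouping records by their quasi-identifier values and confirming that no group is smaller than k. A minimal sketch, assuming records are plain dictionaries; `is_k_anonymous` and the field names are hypothetical, and the default k = 5 mirrors the FERPA threshold as described above.

```python
from collections import Counter
from typing import Iterable, Sequence


def is_k_anonymous(records: Iterable[dict], quasi_identifiers: Sequence[str], k: int = 5) -> bool:
    """True if every combination of quasi-identifier values is shared by at least k records."""
    counts = Counter(tuple(r[q] for q in quasi_identifiers) for r in records)
    return all(size >= k for size in counts.values())


# Hypothetical records with birthdates coarsened to years and zip codes truncated.
rows = [{"gender": "F", "birth_year": 1990, "zip": "021**", "grade": g} for g in "ABBCA"]
rows.append({"gender": "M", "birth_year": 1991, "zip": "021**", "grade": "B"})
qi = ("gender", "birth_year", "zip")

print(is_k_anonymous(rows[:5], qi))  # True: all five records share one combination
print(is_k_anonymous(rows, qi))      # False: the sixth record sits in a group of one
```

Note that the check says nothing about fields left out of `quasi_identifiers`; it is only as good as that list.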

K-anonymity is far more privacy-preserving than simply removing directory information, and so FERPA’s notion of privacy is an improvement on HIPAA’s. But k-anonymity is not a panacea. Consider, for example, what happens when the data provider chooses the set of quasi-identifiers poorly. To recreate the linking attack, an adversary simply needs to find a field in the data set that was not treated as a quasi-identifier but that also appears in another data set. Since it is hard to anticipate what data appears in other data sets, the privacy guarantees afforded by k-anonymity are fragile.
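The fragility is easy to reproduce on toy data. In this sketch, a hypothetical release is 5-anonymous with respect to its declared quasi-identifiers, but a `school` field that nobody declared splits the crowd. The field names, values, and the helper `smallest_group` are invented for illustration.

```python
from collections import Counter
from typing import Iterable, Sequence


def smallest_group(records: Iterable[dict], fields: Sequence[str]) -> int:
    """Size of the smallest group of records sharing the same values on `fields`."""
    counts = Counter(tuple(r[f] for f in fields) for r in records)
    return min(counts.values())


# Hypothetical release: "school" was not treated as a quasi-identifier.
release = [
    {"gender": "F", "birth_year": 1990, "zip": "021**", "school": s, "gpa": g}
    for s, g in [("North", 3.1), ("North", 3.4), ("North", 2.9), ("North", 3.7), ("South", 3.9)]
]
declared = ("gender", "birth_year", "zip")
print(smallest_group(release, declared))  # 5: looks 5-anonymous

# If an adversary can learn each student's school from some other data set
# (a team roster, say), the crowd shrinks and the South student stands alone.
print(smallest_group(release, declared + ("school",)))  # 1
```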

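Even when a data provider chooses the set of quasi-identifiers well, making a data set k-anonymous can change its statistical properties, which can lead to conclusions that are not supported by the original data. The reasons for this are subtle, but they stem from how k-anonymity is typically achieved: values are generalized (e.g., an exact birthdate replaced by a birth year) or outlying records are suppressed until every group is large enough, and both operations discard information an analysis may depend on. The end result is that data providers face a serious dilemma: privilege the anonymity of individuals or the accuracy of statistical analyses.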
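One way this distortion shows up can be seen by generalizing exact ages into 10-year bands, a common kind of generalization step. The sketch is hypothetical throughout (the band width, the midpoint analysis, and the two groups are invented): two groups whose true mean ages differ by five years become indistinguishable once both fall into the same band.

```python
from statistics import mean


def generalize_age(age: int, width: int = 10) -> range:
    """Replace an exact age with the band it falls in, e.g. 23 -> range(20, 30)."""
    low = (age // width) * width
    return range(low, low + width)


def band_midpoint(band: range) -> float:
    """What an analyst might use in place of the lost exact value."""
    return (band.start + band.stop - 1) / 2


group_a = [20, 21, 22, 23, 24]
group_b = [25, 26, 27, 28, 29]

true_gap = mean(group_b) - mean(group_a)  # 5 years on the original data
est_gap = (mean(band_midpoint(generalize_age(b)) for b in group_b)
           - mean(band_midpoint(generalize_age(a)) for a in group_a))  # 0.0 after generalizing
print(true_gap, est_gap)
```

A real effect in the original data disappears entirely, which is exactly the kind of unsupported conclusion the trade-off above warns about.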

Differential Privacy, GDPR, and the U.S. Census

The European Union has taken a different approach to privacy protection in the General Data Protection Regulation (GDPR). Rather than prescribing how a data set is to be de-identified, the regulation treats data as anonymous only if individuals cannot be re-identified by any means “reasonably likely to be used.” This characterization doesn’t say how the de-identification needs to be done, but rather how strong the resulting privacy protection needs to be.

The U.S. Census Bureau has a similar requirement for the data it collects, which led it to adopt differential privacy as its privacy protection mechanism. This is a very different approach from the previous two. Gone is the goal of distributing a de-identified data set to which anyone can have access. Instead, the data set is kept in a secure location, and researchers are given a way to ask questions of it. With this centralized point of control, the data provider can: (1) restrict the types of queries allowed; (2) add statistical noise to the answers it returns; and (3) track the total amount of information released across all queries. Done correctly, these three mechanisms together can mathematically bound how much the answers reveal about any single individual, which limits the risk that individuals in the data set can be re-identified.
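The three mechanisms can be sketched as a toy query server. This is an illustration under stated assumptions, not the Census Bureau’s system, which is considerably more elaborate: the class `PrivateCountServer` and its parameters are hypothetical, only counting queries are supported, and the noise comes from the textbook Laplace mechanism. It is also not production-safe (naive floating-point noise sampling is a known weakness), which is part of why real implementations are hard.

```python
import random
from typing import Callable, Iterable, Optional

Record = dict


class PrivateCountServer:
    """Toy curator that answers counting queries with Laplace noise under a fixed budget."""

    def __init__(self, records: Iterable[Record], total_epsilon: float,
                 seed: Optional[int] = None) -> None:
        self._records = list(records)    # raw data stays inside the curator
        self.remaining = total_epsilon   # privacy budget left for all future queries
        self._rng = random.Random(seed)

    def _laplace(self, scale: float) -> float:
        # The difference of two i.i.d. exponentials with mean `scale` is Laplace(0, scale).
        return self._rng.expovariate(1 / scale) - self._rng.expovariate(1 / scale)

    def count(self, predicate: Callable[[Record], bool], epsilon: float) -> float:
        """Noisy count of records satisfying `predicate`, spending `epsilon` of the budget."""
        # (1) Only counting queries are offered; adding or removing one person changes
        #     a count by at most 1, so the query's sensitivity is 1.
        if epsilon <= 0:
            raise ValueError("epsilon must be positive")
        # (3) Budgets spent on successive queries add up (sequential composition).
        if epsilon > self.remaining:
            raise RuntimeError("privacy budget exhausted")
        self.remaining -= epsilon
        true_count = sum(1 for r in self._records if predicate(r))
        # (2) Noise scale = sensitivity / epsilon: a smaller epsilon means more noise.
        return true_count + self._laplace(1 / epsilon)


server = PrivateCountServer([{"age": a} for a in (23, 35, 41, 52, 67)],
                            total_epsilon=1.0, seed=0)
print(server.count(lambda r: r["age"] > 40, epsilon=0.5))  # 3 plus Laplace noise
print(server.count(lambda r: r["age"] > 40, epsilon=0.5))
# A third query would raise RuntimeError: the budget of 1.0 has been spent.
```

Tracking the budget matters because asking the same question repeatedly and averaging the answers would otherwise wash the noise out.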

While the mathematical guarantees of differential privacy are reassuring, implementing systems that use these techniques has proven surprisingly difficult. In addition, like k-anonymity, differential privacy introduces statistical variation that can alter the results of a data analysis. The dilemma of how much to privilege personal privacy over the generation of new knowledge remains.
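The accuracy cost can be made concrete for one common noise-adding technique, the Laplace mechanism (assumed here for illustration; other mechanisms exist). For a query f on data set D with sensitivity Δf (the most one person’s data can change f) and privacy parameter ε:

```latex
M(D) = f(D) + Z, \qquad Z \sim \operatorname{Lap}(b), \qquad b = \frac{\Delta f}{\varepsilon}

\operatorname{Var}(Z) = 2b^{2}, \qquad \Pr\big[\,|Z| > t\,b\,\big] = e^{-t} \quad (t \ge 0)
```

For a count (Δf = 1) released with ε = 0.1, the scale is b = 10, so the standard deviation is 10√2 ≈ 14.1 and the answer is off by more than 10 with probability e^{-1} ≈ 0.37. Halving ε to strengthen privacy doubles b, which is the privacy-versus-accuracy dilemma in a single parameter.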

If nothing else, the approaches taken by these different regulations show that anonymity may be seductive as a mechanism to ensure privacy, but it is a subtle and difficult idea to put into practice. Knowing what notion of anonymity a regulation requires is necessary for compliance, but may not be sufficient to meet your ethical obligations as a data steward. If you want more than mere compliance, you always need to think critically about your data and about the mechanisms you are using to protect the identities of the data subjects.

Interested in learning more about trending topics in data privacy from Mike and Jim? Visit the Harvard Online blog page for their take on ChatGPT, TikTok, and password security, or apply to join the next cohort of their course, Data Privacy and Technology.

Content is provided for informational purposes only and does not constitute legal advice.
